In this post, with guest co-writers Edwin Biemond (@biemond) and Joel Nation (@joelith), we will explore virtualization with Docker. You may have heard of Docker; it is getting a lot of interest lately, especially with the recent announcement that Google are using it in their cloud service. Docker allows you to create reusable ‘containers’ with applications in them. These can be distributed, will run on several platforms, and are much smaller than the ‘equivalent’ virtual machine images. The virtualization approach used by Docker is also a lot more lightweight than the approach used by hypervisors like VMware and VirtualBox.

The motivation for looking at Docker is twofold. Firstly, a lot of virtual machine images are created for training purposes, e.g. for SOA Suite. These are then distributed to Oracle folks and partners around the world. They tend to be in the 20-40 GB range in terms of size. This means that downloading them takes time, unzipping them takes time, and you need plenty of space to store them. Publishing updates to these images is hard, and in practice means everyone has to download them all over again. It would be nice to have a better way to distribute pre-built environments like this: a method that allows much smaller downloads and easy publishing of updates, without sacrificing control over the configuration of the environment, so that you still know what you are going to end up with when you start up the ‘image’.

Secondly, as many of you know, I have a strong interest in Continuous Delivery and automation of the build-test-release lifecycle. Being able to quickly create environments that are in a known state, to use for testing automation, is a key capability we would want when building a delivery pipeline.

Docker provides some capabilities that could really help in both of these areas. In this post, we are just going to focus on the first one, and while we are exploring, let’s also look at how well Docker integrates with other tools we care about – like Vagrant, Chef and Puppet for example.

Introducing Docker

Docker is a virtualization technology that uses containers. Linux containers are built on kernel features (namespaces and control groups) that were added relatively recently, although Solaris has had containers (or ‘zones’) for a long time. A container is basically a virtual environment (like a VM) where you can run applications in isolation, protected from other applications in other containers or on the ‘host’ system. Unlike a VM, it does not emulate a processor and run its own copy of the operating system, with its own memory and virtual devices. Instead, it shares the host's operating system kernel, but has its own file system, which is built by overlaying sparse file systems on top of each other (you’ll see what this means in practice later on). When you are ‘in’ the container, it looks like you are on a real machine, just like when you are ‘in’ a VM. The difference is that the container approach uses far fewer system resources than the VM approach, since it is not running another copy of the operating system.

This means that more of your physical memory is available to run the actual application you care about, and less of it is consumed by the virtualization software and the virtualized operating system. When you are running VMs, this overhead can be significant, especially if you need to run two or three of them at once.

Containers are pretty mainstream – as we said, Solaris has had them for years, and people have been using them to isolate production workloads for a long time.

You can use Linux containers without using Docker. Docker just makes the whole experience a lot more pleasant. Docker allows you to create a container from an ‘image’, and to save the changes that you make, or to throw them away when you are done with the container.

These images are versioned, and they are layered on top of other images, so they are reusable. For example, if you had five demo/training environments you wanted to use, but they all have SOA Suite, WebLogic, JDK, etc., in them, you can put SOA Suite into one image, and then create five more images, one for each demo/training environment, each as a layer on top of the SOA image. Now if you had one of those five ‘installed’ on your machine and you wanted to fire up one of the others, Docker allows you to just pull down that relatively small demo image and run it right on top of the relatively large SOA image you already have.

If you customize the demo and want to share the customizations with others, you can ‘push’ your image back to a shared registry (repository) of images. Docker is just going to push the (relatively very small) changes you made, and other people can then use them by running that image on top of the ones they already have.

Running your customized image on top of the others they have will not change the others, so they can still use them any time they need them. And this is done without the need for the relatively large ‘snapshots’ that you would create in a VM to achieve the same kind of flexibility.

Probably the best way to learn about Docker is to try it out. If you have never used container-based virtualization before, things are going to seem a little weird, and it might take a while to get it straight in your head. But please do persevere – it is worth it in the long run.

To follow along with this post you need an Ubuntu 14.04 desktop environment. Running it inside a VirtualBox VM is fine for the purposes of learning about Docker. Just do a normal installation of Ubuntu.

Optional Section – Vagrant

Or, if you prefer not to install from scratch, you can use Vagrant as follows.

First install Vagrant (http://vagrantup.com/) and VirtualBox. Then create a directory to hold your Ubuntu image.

# mkdir ubuntu-desktop
# cd ubuntu-desktop/
# vagrant init janihur/ubuntu-1404-desktop

Before you start the image, edit the Vagrantfile and uncomment the following section to assign more memory to the VirtualBox VM:

config.vm.provider "virtualbox" do |vb|
  # Don't boot with headless mode
  vb.gui = true
  # Use VBoxManage to customize the VM. For example to change memory:
  vb.customize ["modifyvm", :id, "--memory", "4096"]
end

Start the image with:

# vagrant up

This will download an Ubuntu 14.04 box with a 100 GB disk and an LXDE-based Lubuntu desktop installed.

To log in, use vagrant/vagrant (user/password); the vagrant user can also sudo without a password.

For more info see https://bitbucket.org/janihur/ubuntu-1404-server-vagrant

(End optional section)

Once you have an Ubuntu VM (or native install), install Docker:

(as root) # curl -sSL https://get.docker.com/ubuntu | /bin/sh

This will download the APT repository keys for Docker and install the Docker package. After it is done you can run ‘docker -v’ and it should report version 1.3.1 (at the time of writing).

For the rest of this post, we will display prompts as follows:

- host# indicates a command you type on the host system (as root)

- container# indicates a command you type inside the container (as root)

- container$ indicates a command you type inside the container (as a non-root user)

Now, let’s explore some of the basics.

Basic Docker Usage

The first obvious thing to do is to start up a container and see what it looks like. Let’s fire up an empty Ubuntu 14.04 container:

host# docker run ubuntu:14.04 ls

Since this is likely the first time you are using the ubuntu:14.04 image, Docker will download it first and then start the container. You will see some output like this:

bin boot ... var

That’s just the output of running the ‘ls’ command inside a container that we just created. When the ‘ls’ command finished, the container stopped too. You can see its remnants using the following command (your container ID and name will be different):

host# docker ps -a
CONTAINER ID    IMAGE           COMMAND   CREATED          STATUS                     PORTS   NAMES
3f67ac6342d8    ubuntu:14.04    "ls"      6 seconds ago    Exited (0) 5 seconds ago           hungry_mcclintock

Also, we downloaded that ubuntu:14.04 image – you can see your images using this command:

host# docker images
REPOSITORY   TAG     IMAGE ID       CREATED       VIRTUAL SIZE
ubuntu       14.04   5506de2b643b   2 weeks ago   199.3 MB

Now that was not terribly interesting – the container only ran for milliseconds. Notice there was no waiting for the operating system to boot! Let’s fire up another container and run a shell in it so we can look around. Try this command:

host# docker run -ti ubuntu:14.04 /bin/bash

This will start up a new container, allocate a pseudo-terminal for it (-t), keep its standard input open so you can type into it (-i), and run /bin/bash. Almost instantly you will be sitting at the bash prompt. Take a look around, just don’t exit the shell yet. You will notice that the container has its own file system, and you cannot see out to the host file system. It looks very much like being inside a ‘traditional’ VM.

Create a file and put some content into it, for example:

container# date > myfile

Now let’s exit the container and take a look around from the outside.

container# exit

You will be back at your host Ubuntu system’s bash prompt now. Try the ‘docker ps -a’ command again. Notice you have another container now.

Let’s see what happened in that container. Grab the name or the hex ID of the container and try this command – substituting in the right ID or name:

host# docker diff abcd12345abc

Note: For the rest of this post, we will just put “ID” or “SOA_CONTAINER_ID” or something like that, since the IDs that you get will be different from ours anyway. When you see “ID”, just substitute in the correct container or image ID depending on the context.

It will show you all the files you have changed in the container! You can go ahead and start up a new container with the same command, and it will be just like the last one was when you started it – those changes you made will not be there. So you can fire up as many containers as you like and have them in exactly the same state each time – that is pretty useful for demos and training environments.
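`docker diff` prints one path per line, prefixed with A (added), C (changed) or D (deleted). If you only want a quick count rather than the full list, a small bash helper like the one below is enough; the name `summarize_diff` is our own and not part of Docker.

```shell
# Hypothetical helper (not a Docker command): count the entries in
# `docker diff` output by change type. Reads the diff from standard input.
summarize_diff() {
  awk '
    $1 == "A" { added++ }
    $1 == "C" { changed++ }
    $1 == "D" { deleted++ }
    END { printf "added=%d changed=%d deleted=%d\n", added, changed, deleted }
  '
}
```

Usage: `host# docker diff abcd12345abc | summarize_diff`.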

But what if you want to keep those changes? You just need to save them as a new image. Try these commands:

host# docker commit abcd12345abc host# docker images

Now you will see that you have a new image. It is probably very small. In fact, if all you did was create that one new file, it will probably show the same virtual size as the ubuntu:14.04 image you created it from, because the virtual size includes the parent image and your change is too small to show up in the rounded figure.

You can start a new container using your image – just grab the ID of the image and try a command like this:

host# docker run -ti ff77ff66ff55 /bin/bash

Again, you will be dropped into the bash prompt inside a new container. Take a look – your file is there. You can exit out of this container, and start another one using ubuntu:14.04 and it looks just like it did before.

Each image is essentially a delta/change set on top of the image it was based on. So you can build up layers of images, or you can create as many images as you like (say for different demos) on top of a common base image. These are going to be very small in size, and much easier to manage than trying to share a single VM for a bunch of demos, or trying to manage snapshots, or multiple copies of VMs. And since that common base image will be the underlying operating system (Ubuntu in our case) all the people you share your image with are likely to have that already downloaded.

Now we have been playing around a bit, and we have a bunch of old containers lying around. Let’s clean up a bit. You can remove the old containers using the command:

host# docker rm abcde12345abc

Just put in the appropriate IDs that you got in the output of ‘docker ps -a’.
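To clean up many containers at once, you can feed the output of `docker ps -a -q` (which prints just the container IDs) into `docker rm`. Without the -f flag, `docker rm` refuses to remove a running container, so running ones are reported as errors and left alone, but every stopped container is deleted, so commit anything you still want to keep first. The function name is hypothetical.

```shell
# Hypothetical helper: try to remove every container on this host.
# Stopped containers are deleted; for running ones `docker rm` prints an
# error and moves on, because no -f (force) flag is passed.
remove_stopped_containers() {
  local id
  docker ps -a -q | while read -r id; do
    docker rm "$id"
  done
}
```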

Let’s move on to creating some useful containers now that we have a feel for the basics.

Creating your own containers

Let’s create a container with some more useful things in it now. Later we are going to build a container with SOA Suite in it and one with the Oracle Database in it. There are a few common things we will want in both of those, so let’s set those up in a container now, and make a base image from that container which we can then use to create both the SOA and the database containers later on.

What do we want in our container?

- First we want an oracle user and group

- We want to be able to run the GUI install programs, so let’s install the X libraries we will need for that too

- We may as well put the JDK in there as well

Ok, we have a plan. So let’s go ahead and create the container. We start by firing up a new container from the base ubuntu:14.04 image that we used before:

host# docker run -ti ubuntu:14.04 /bin/bash

Now let’s create our users and groups:

container# groupadd oracle
container# useradd -m -d /home/oracle -s /bin/bash -g oracle oracle
container# passwd oracle
(type the password in twice)

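And, let’s install the X libraries we need. The base ubuntu:14.04 image ships without package lists, so refresh them with apt-get update first:

container# apt-get update
container# apt-get install libxrender-dev libxtst-dev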

And then we can install the JDK – oh wait, how do we get the JDK installer into the container? We don’t have a browser in the container to download it with. Well, we could install a browser:

container# apt-get install firefox

But that will make our container a bit bigger and we don’t really need it. So let’s choose a different approach. Download the JDK using the browser on the host and put it in a directory on the host, let’s say /home/oracle/Downloads for example. Now we need to mount that directory in our container. Go ahead and exit the container:

container# exit

Now get its ID:

host# docker ps -a

Create a ‘work in progress’ image from that container:

host# docker commit -a "user" -m "installed X libs and created oracle user" ID
host# docker images

Grab the ID of the new image, and fire up a new container:

host# docker run -ti -v /home/oracle/Downloads:/home/oracle/Downloads ID /bin/bash

This will drop you into the root bash shell in the container. Change to the oracle user:

container# su - oracle

Take a look in your Downloads directory – it is a mirror of the one on the host – and there is your JDK tar file. Let’s untar it into our home directory:

container$ cd
container$ tar xzvf Downloads/jdk1.7.0_71.tar.gz
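Later steps (java -jar for the installers, jconsole) assume java is on the oracle user's PATH. One way to arrange that, assuming the JDK was untarred to /home/oracle/jdk1.7.0_71 and the default Ubuntu skeleton files are in place (so a login shell such as `su - oracle` reads ~/.profile, which sources ~/.bashrc), is to append two export lines to ~/.bashrc. The helper name is hypothetical.

```shell
# Hypothetical helper: put a JDK on the PATH for future interactive shells.
# usage: add_jdk_to_profile JDK_DIR [RC_FILE]   (RC_FILE defaults to ~/.bashrc)
add_jdk_to_profile() {
  local jdk_dir=$1 rc_file=${2:-$HOME/.bashrc}
  {
    printf 'export JAVA_HOME=%s\n' "$jdk_dir"
    # Single quotes keep $JAVA_HOME and $PATH unexpanded until the file is read.
    printf '%s\n' 'export PATH=$JAVA_HOME/bin:$PATH'
  } >> "$rc_file"
}
```

Usage: `container$ add_jdk_to_profile /home/oracle/jdk1.7.0_71`, then `container$ . ~/.bashrc` and check with `java -version`.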

Great, now we have everything we wanted. Before we leave the container, let’s just remove any unnecessary files in /tmp (there probably aren’t any right now, but it is good to get into this practice to keep image sizes as small as possible).

container$ exit
container# rm -rf /tmp/*
container# exit

Now let’s save our changes. Get the container ID and make a new image:

host# docker ps -a
host# docker commit -a "user" -m "installed JDK" ID
host# docker images

There is our new image. Let’s give it a name so we don’t have to keep using that ID. Docker names images with a repository name and a tag (usually a version). Let’s call ours “oracle-base” with the tag “1.0”:

host# docker tag ID oracle-base:1.0
host# docker images

That’s a lot nicer!
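`docker commit` also accepts an optional REPOSITORY[:TAG] argument after the container ID, so committing and naming can be done in one step. A small wrapper is sketched below; its name and the default author taken from $USER are assumptions.

```shell
# Hypothetical wrapper: commit a container straight to a named image.
# usage: commit_as CONTAINER NAME:TAG MESSAGE [AUTHOR]
commit_as() {
  local container=$1 name=$2 message=$3 author=${4:-$USER}
  docker commit -a "$author" -m "$message" "$container" "$name"
}
```

Usage: `host# commit_as ID oracle-base:1.0 "installed JDK"`.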

If you want to keep things clean, you can remove the older images that we no longer need with a command like:

host# docker rmi ID

Note that is rmi with an “i” on the end – rmi is for images. rm (without the “i”) is for containers.

Now, before we go on to create our SOA and database images, let’s learn about some more interesting and useful features of Docker.

More Interesting Stuff

Now we are going to want to be able to get ‘in’ to our containers from the outside – so we are going to need to expose some ports. Let’s look at that first.

Publishing ports

Docker allows you to publish ports from the container – this means that a port on the host will be forwarded to the container. So if we have WebLogic in the container listening on port 7001, we can have Docker make that available as a port on the host. This is done by adding some more details when we start a container, for example:

host# docker run -ti -p 0.0.0.0:7001:7001 oracle-base:1.0 /bin/bash

This will start up a new container, using our base image, and will have the host listen to port 7001 on all interfaces, and forward any traffic to port 7001 in the container. You can publish as many ports as you like by adding multiple -p options. Later (when we have WebLogic Server installed) we will see this in action.

Sharing the X display

Although it is usually possible to get by without a GUI when we are installing and configuring the FMW software, it might just be easier with one. So let’s see how to share the host’s X display with a container. Again, this is done with extra details when starting a container, like this:

host# docker run -ti -p 0.0.0.0:7001:7001 -v /tmp/.X11-unix:/tmp/.X11-unix -e DISPLAY=$DISPLAY oracle-base:1.0 /bin/bash

So the trick is to set a new environment variable (using -e) and to share the special file by mounting with -v. A very big “thank you” to Fábio Rehm for publishing this method at http://fabiorehm.com/blog/2014/09/11/running-gui-apps-with-docker/

Having done this, when you launch an X program in a container with the X libraries installed in it, the window will appear on the host’s display. Try it out:

container# su - oracle
container$ jconsole
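The port, X socket and Downloads options get repeated on most of the docker run commands from here on. A small bash wrapper can bundle them; this is a sketch with a hypothetical name that assumes the installers live in /home/oracle/Downloads on the host and publishes each port in FMW_PORTS one-to-one on all host interfaces (defaulting to 7001).

```shell
# Hypothetical wrapper (our own name, not a Docker command) for the long
# "docker run" lines used in this post.
# usage: [FMW_PORTS="7001 7004"] fmw_run IMAGE [COMMAND...]
fmw_run() {
  local image=$1
  shift
  local ports=${FMW_PORTS:-7001}
  local port
  local -a args
  args=(-ti
    -v /tmp/.X11-unix:/tmp/.X11-unix
    -v /home/oracle/Downloads:/home/oracle/Downloads
    -e "DISPLAY=${DISPLAY:-}")
  # $ports is left unquoted on purpose so it splits into one port per word.
  for port in $ports; do
    args+=(-p "0.0.0.0:${port}:${port}")
  done
  docker run "${args[@]}" "$image" "$@"
}
```

Usage: `host# fmw_run oracle-base:1.0 /bin/bash`, or `host# FMW_PORTS="7001 7004" fmw_run IMAGE_ID /bin/bash` when you need more ports.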

Making images smaller

Since keeping images as small as possible is a key goal, it is worth knowing the following method to reduce the size of an image after you have deleted files from it. For example, suppose you just installed SOA Suite in your container. That will have created a bunch of files in /tmp. Suppose you then deleted them. Maybe your image is still 6GB in size. You can use this method to make it as small as possible:

host# docker export ID | docker import - new:name

The ‘docker export’ command works on a container. There is also ‘docker save’, which works on an image, but ‘docker save’ includes all the layers, so it won’t necessarily give you a smaller image.

This will create a new image called new:name that has exactly the same content but is as small as possible. When I did this on that 6GB image I installed SOA in, it went down to about 3GB.
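The same pipeline, wrapped as a reusable function (the name is hypothetical). Keep in mind that export/import carries over only the container's filesystem: image settings such as a default CMD, EXPOSE or ENV from a Dockerfile are not kept, so you may need to pass the command explicitly when you run the flattened image.

```shell
# Hypothetical helper: flatten a container's filesystem into a new image.
# usage: flatten_image CONTAINER NEW_NAME:TAG
flatten_image() {
  local container=$1 new_name=$2
  # export writes a tar of the container's filesystem to stdout;
  # "import -" reads that tar from stdin and creates a single-layer image.
  docker export "$container" | docker import - "$new_name"
}
```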

Daemon Mode

The other useful thing to know about is ‘daemon mode’, which lets you start up a container without it taking over your session; it just runs in the background. To do this, add the -d option. For example, this will start up our oracle-base container in the background:

host# docker run -dt oracle-base:1.0 /bin/bash

This is also useful when an image has a predefined default command: you do not even need to specify a command (like /bin/bash), and the container will just start itself up. More on this later.
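To get a shell inside a container that is running in the background, Docker 1.3 (the version installed above) added `docker exec`, which starts an extra process in a running container. A minimal wrapper with a hypothetical name:

```shell
# Hypothetical helper: open an interactive bash inside a running container.
# docker exec starts a second process; leaving this shell with "exit"
# does not stop the container's main process.
container_shell() {
  docker exec -ti "$1" /bin/bash
}
```

Usage: `host# container_shell ID`.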

Setting up a private registry

Before long, you are going to want to share images with other people. Docker provides a mechanism to do this for public images. But if you have some of your own data or licensed software in the image, you might want to set up a private Docker registry. The fastest way to do this is to just fire up a Docker container with the registry in it, and map port 5000 to the host:

host# docker run -ti -p 0.0.0.0:5000:5000 --name registry-server registry

This will pull down the registry image from the Docker Hub (the public registry) and start it up. You can check that it is working by hitting the following URL:

http://host.domain:5000/v1/search

Substitute in the correct name of your host. This will give you back a little bit of JSON telling you there are no images in the registry right now.

You can also search the registry using the docker command:

host# docker search host.domain:5000/name

Substitute the correct host name in, and change ‘name’ to the name of the image you want, e.g. oracle-base. Of course, your new registry will not have any images in it yet. So let’s see how to publish an image to the registry.

Publishing an image

Publishing an image is a two-step process: first we tag it with the registry’s information, then we do a ‘push’:

host# docker tag ID host.domain:5000/oracle-base:1.0
host# docker push host.domain:5000/oracle-base:1.0

Docker will make sure any other images that this one depends on (layers on top of) are also in the registry.
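Depending on your Docker version, the push may be refused because this registry speaks plain HTTP: from Docker 1.3.1 the daemon expects HTTPS for a remote registry unless that registry is listed with the daemon's --insecure-registry option. On Ubuntu, daemon options go into DOCKER_OPTS in /etc/default/docker. The sketch below (hypothetical helper name) appends such a line; run it as root on every host that pushes to or pulls from the registry, then restart the daemon with `service docker restart`.

```shell
# Hypothetical helper: allow plain-HTTP access to one private registry.
# usage: allow_insecure_registry HOST:PORT [CONFIG_FILE]
allow_insecure_registry() {
  local registry=$1 conf=${2:-/etc/default/docker}
  # The file is sourced as shell, so referencing $DOCKER_OPTS keeps any
  # options set earlier in the file instead of overwriting them.
  printf 'DOCKER_OPTS="$DOCKER_OPTS --insecure-registry %s"\n' "$registry" >> "$conf"
}
```

Usage: `host# allow_insecure_registry host.domain:5000 && service docker restart`.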

Now, your friends can use that image. Let’s see how:

host# docker pull host.domain:5000/oracle-base:1.0
host# docker run -ti host.domain:5000/oracle-base:1.0 /bin/bash

Easy, hey!

It is also possible to distribute images as tar files (if you don’t have or want to have a registry). To do this, you just run export against a container to create the tar, and then import on the other end:

host# docker export ID > my.tar
host# cat my.tar | docker import - oracle-base:1.0
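Exported filesystems compress reasonably well, so when you hand a tar file to someone it may be worth gzipping it on the way out and unpacking it on the way in. A sketch with hypothetical function names:

```shell
# Hypothetical helpers for shipping a container filesystem as a .tar.gz.
# usage: export_container_gz CONTAINER ARCHIVE.tar.gz
export_container_gz() {
  local container=$1 archive=$2
  docker export "$container" | gzip > "$archive"
}

# usage: import_container_gz ARCHIVE.tar.gz NAME:TAG
import_container_gz() {
  local archive=$1 name=$2
  gunzip -c "$archive" | docker import - "$name"
}
```

Usage: `host# export_container_gz ID my.tar.gz` on one machine and `host# import_container_gz my.tar.gz oracle-base:1.0` on the other.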

Building Fusion Middleware containers

Ok, so now we have learned what we need to know about Docker, let’s actually use it to create, publish and use some containers with Fusion Middleware in them.

Creating a container with WebLogic Server

Let’s start by creating a container with a simple WebLogic Server installation. Let’s assume that you have downloaded the WebLogic Server installer and you have it in your /home/oracle/Downloads directory.

Here is the command to start up the container:

host# docker run -ti -p 0.0.0.0:7001:7001 -v /tmp/.X11-unix:/tmp/.X11-unix -v /home/oracle/Downloads:/home/oracle/Downloads -e DISPLAY=$DISPLAY oracle-base:1.0 /bin/bash

And now for the installation:

container# su - oracle
container$ java -jar /home/oracle/Downloads/fmw_12.1.3.0.0_wls.jar
(follow through the installer and then the config wizard to create a domain)
container$ exit
container# rm -rf /tmp/*
container# exit
host# docker commit -a "user" -m "wls installed and domain created" ID
host# docker images

Now, let’s create a container using that new image and fire up WLS:

host# docker run -ti -p 0.0.0.0:7001:7001 -v /tmp/.X11-unix:/tmp/.X11-unix -v /home/oracle/Downloads:/home/oracle/Downloads -e DISPLAY=$DISPLAY IMAGE_ID /bin/bash
container# su - oracle
container$ wlshome/user_projects/domains/base_domain/startWebLogic.sh

Now, go and fire up a browser on the host and point it to http://localhost:7001/console and you will see the WebLogic Server console from inside the container! Have a play around, and shut down the container when you are finished.
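WebLogic takes a while to start, so the console will not answer straight away. If you want to script the wait from the host, bash can open a TCP connection through its /dev/tcp redirection. This is a minimal sketch: the function name and the 5-second poll interval are assumptions, and it only checks that the port accepts connections, not that the console application is fully deployed.

```shell
# Hypothetical helper: wait until HOST:PORT accepts TCP connections.
# usage: wait_for_port HOST PORT [TRIES]   (polls every 5 seconds)
wait_for_port() {
  local host=$1 port=$2 tries=${3:-60} i
  for ((i = 1; i <= tries; i++)); do
    # Redirecting to /dev/tcp/HOST/PORT makes bash open a TCP connection;
    # doing it in a subshell means the connection is closed again at once.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    if ((i < tries)); then
      sleep 5
    fi
  done
  return 1
}
```

Usage: `host# wait_for_port localhost 7001 60 && echo "WebLogic is listening"`.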

Just for good measure, let’s also publish this image for others to use:

host# docker tag ID host.domain:5000/weblogic:12.1.3
host# docker push host.domain:5000/weblogic:12.1.3

Creating a container with the oracle database

As well as creating your images manually, you can also automate the image creation using something called a Dockerfile. Let’s use this approach to create the database image, so we can see how that works too. We can also use tools like Chef and Puppet to help with the configuration. In this section, let’s use Edwin’s Puppet module for the Oracle Database.

Using a Dockerfile, we can reproduce our steps and easily change something without forgetting anything important (infrastructure as code). The Dockerfile will do all the operating system actions, like installing the required packages and copying in the software. Puppet or Chef will do the Oracle Database provisioning. Doing it all in a Dockerfile would probably be too complex and would lead to too many layers (every step in a Dockerfile is a layer), so it is probably better to reuse already proven Puppet modules or Chef cookbooks.

Puppet and Chef have their own configuration files (Manifests and Recipes respectively) which invoke the Puppet Resources/Modules or Chef Cookbooks to actually perform the configuration. So when we want to change the Database service name, add some schemas or change some init parameters we can do this in a Puppet Manifest/Chef Recipe.

For the database we will install Oracle Database Standard Edition (12.1.0.1). You could also use XE, but this can lead to some unexpected FMW issues, and the Enterprise Edition is more than we need for our use case.

We start by creating a Dockerfile. The whole project can also be found here https://github.com/biemond/docker-database-puppet. Let’s take a look through our Dockerfile.

The first line or instruction of a Dockerfile should be the FROM command, which specifies our starting or base image. In this case we will use CentOS 6, because it is the closest to Oracle Enterprise Linux, and version 7 is probably too new. These minimal images are maintained by CentOS and can be found on the Docker registry at https://registry.hub.docker.com/_/centos/

FROM centos:centos6

The next step is installing the required packages. One of the first things we need to do is install the open source edition of Puppet or Chef and its matching librarian program, which retrieves the required modules from Git or the Puppet Forge (the Chef equivalent is the Supermarket).

In CentOS we will use yum for this.

# Need to enable centosplus for the image libselinux issue
RUN yum install -y yum-utils
RUN yum-config-manager --enable centosplus
RUN yum -y install hostname.x86_64 rubygems ruby-devel gcc git
RUN echo "gem: --no-ri --no-rdoc" > ~/.gemrc
RUN rpm --import https://yum.puppetlabs.com/RPM-GPG-KEY-puppetlabs && \
    rpm -ivh http://yum.puppetlabs.com/puppetlabs-release-el-6.noarch.rpm
# configure & install puppet
RUN yum install -y puppet tar
RUN gem install librarian-puppet -v 1.0.3
RUN yum -y install httpd; yum clean all

Now we will upload the Puppet manifest using the ADD command. This copies files from your local machine into the image’s filesystem.

ADD puppet/Puppetfile /etc/puppet/
ADD puppet/manifests/site.pp /etc/puppet/

Puppetfile contains all the required Puppet modules which will be downloaded from Git or Puppetforge by the librarian program we installed previously.

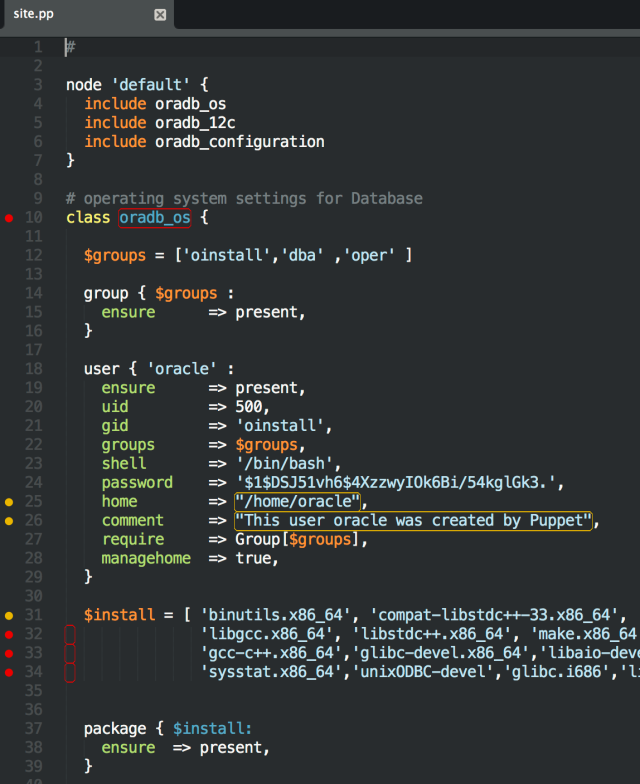

site.pp is the Puppet manifest which contains our configuration for the database installation. You can see a small fragment of the site.pp file below (https://github.com/biemond/docker-database-puppet/blob/master/puppet/manifests/site.pp). This will create the oracle user, the oracle database groups and the required database packages:

For more information about the Puppet Oracle database provisioning, take a look at GitHub: https://github.com/biemond/biemond-oradb

Back to the Dockerfile commands…

With WORKDIR we can change the current directory (like the Linux ‘cd’ command) in the image’s filesystem, and then run librarian with the RUN command.

WORKDIR /etc/puppet/
RUN librarian-puppet install

Everything is ready to start Puppet but first we need to create some directories and upload the database software.

# upload software
RUN mkdir /var/tmp/install
RUN chmod 777 /var/tmp/install
RUN mkdir /software
RUN chmod 777 /software
COPY linuxamd64_12c_database_1of2.zip /software/
COPY linuxamd64_12c_database_2of2.zip /software/

Time to start up puppet:

RUN puppet apply /etc/puppet/site.pp --verbose --detailed-exitcodes || [ $? -eq 2 ]

After this we can clean up. For now this will not make our Docker image smaller, because every step is a separate layer, but we can export/import the container later, which will significantly reduce the image size.

# cleanup
RUN rm -rf /software/*
RUN rm -rf /var/tmp/install/*
RUN rm -rf /var/tmp/*
RUN rm -rf /tmp/*

We also need to add a script which will start the dbora service (created by Puppet). This starts up the Oracle database and the Oracle Listener.

ADD startup.sh /
RUN chmod 0755 /startup.sh

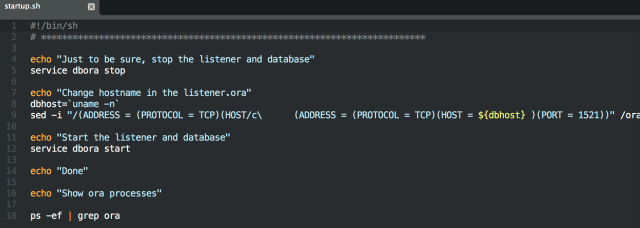

Here is a snippet of the startup.sh script:

The most important part of this script determines the current hostname and changes the host in listener.ora. You can’t use a fixed hostname, because Docker assigns a new one every time you create a new container, and localhost won’t work when you try to connect from outside this container.
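As an illustration of the hostname step, here is a hypothetical sketch (not the actual startup.sh from the repository): rewrite the HOST entry in listener.ora with the container's current hostname before the dbora service is started. It assumes listener.ora uses the usual (HOST = name) syntax and GNU sed; the function name is our own.

```shell
# Hypothetical sketch: point the listener at this container's hostname.
# usage: set_listener_host LISTENER_ORA [HOSTNAME]   (default: $(hostname))
set_listener_host() {
  local listener_ora=$1 host=${2:-$(hostname)}
  # Replace whatever follows "HOST =" up to the next closing parenthesis.
  sed -i -E "s/(HOST[[:space:]]*=[[:space:]]*)[^)]+/\1${host}/" "$listener_ora"
}
```

Usage, assuming ORACLE_HOME is set in the container: `container# set_listener_host "$ORACLE_HOME/network/admin/listener.ora"`.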

The last part of the Dockerfile adds commands so this image will work well in daemon mode. We need to expose the Oracle database listener port and add a default command. This first runs /startup.sh and then bash, so the container keeps running after the startup.sh script finishes.

WORKDIR /
EXPOSE 1521
CMD bash -C '/startup.sh';'bash'

Now we are ready to build the database image, which we can do with the docker build command.

First we need to change directory on the host to the location of the Dockerfile.

host# docker build -t oracle/database12101_centos6 .

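This will probably generate an image of around 13 GB, so if you run Docker inside a VM, make sure it has enough disk space. Exporting and importing a container made from this image removes all the layers and shrinks it to around 7 GB (the flattened image keeps only the filesystem, not Dockerfile settings such as EXPOSE and CMD).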

To test our database image we can try to start a new container. This will use the default command specified in the Dockerfile.

host# docker run -i -t -p 1521:1521 oracle/database12101_centos6:latest

Or we can override the default command with /bin/bash and start the database ourselves:

host# docker run -i -t -p 1521:1521 oracle/database12101_centos6:latest /bin/bash
container# /startup.sh

Creating a container with SOA

Let’s continue by creating a container with a SOA Suite installation. This time, for contrast, we will use the manual approach. Let’s assume that you have downloaded the SOA Suite and FMW Infrastructure installers and you have them in your /home/oracle/Downloads directory.

Here is the command to start up the container:

host# docker run -ti -p 0.0.0.0:7001:7001 -v /tmp/.X11-unix:/tmp/.X11-unix -v /home/oracle/Downloads:/home/oracle/Downloads -e DISPLAY=$DISPLAY oracle-base:1.0 /bin/bash

And now for the installation:

container# su - oracle
container$ java -jar /home/oracle/Downloads/fmw_12.1.3.0.0_infrastructure.jar
(follow through the installer - when it fails the prereq test click on the Skip button)
container$ java -jar /home/oracle/Downloads/fmw_12.1.3.0.0_soa.jar
(follow through the installer - when it fails the prereq test click on the Skip button)
container$ exit
container# rm -rf /tmp/*
container# exit
host# docker commit -a "user" -m "soa installed" ID
host# docker images
host# docker tag ID oracle-soa:12.1.3

Now we are going to need to fire up a container with the database in it and one with SOA and connect them together before we start working on the RCU installation and create the SOA domain. Starting up multiple containers does not have the same kind of overhead that starting up multiple VMs does – remember the containers are not running their own copy of the operating system.

Linking containers

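We want to start up the database container we created earlier, run it in the background (in daemon mode) and have it available for our SOA container to connect to. As part of this, we want to give it a name; we will use ‘db’. The command below assumes the database image has been tagged oracle-db:12.1.0.1; substitute whatever name you gave it (for example oracle/database12101_centos6:latest, the name used with docker build):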

host# docker run -dt --name db -p 1521:1521 oracle-db:12.1.0.1

Now we can start up our SOA container and connect it to the database container:

host# docker run -ti --name soa -p 7001:7001 -p 7004:7004 -e DISPLAY=$DISPLAY -v /tmp/.X11-unix:/tmp/.X11-unix --link db:db oracle-soa:12.1.3 /bin/bash

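The link makes the ‘db’ container visible to the ‘soa’ container under the name ‘db’: Docker adds a hosts entry for it and sets environment variables describing its exposed ports, and the two containers talk over Docker’s private bridge network. So from the soa container, you can ‘ping db’ and see the db container. (The -p 1521:1521 on the db container is only needed if you also want to reach the database from the host.)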
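The environment variables from the link are named after the alias and the exposed port, for example DB_PORT_1521_TCP_ADDR and DB_PORT_1521_TCP_PORT (this relies on the EXPOSE 1521 in the database Dockerfile). A small hypothetical helper can turn them into the host:port:sid form used for RCU below, with the SID defaulting to orcl as in this post.

```shell
# Hypothetical helper: build host:port:sid from the variables that
# "--link db:db" sets inside the linked (soa) container.
# usage: db_connect_string [SID]
db_connect_string() {
  local sid=${1:-orcl}
  local addr=${DB_PORT_1521_TCP_ADDR:?not set - was this container started with --link db:db}
  local port=${DB_PORT_1521_TCP_PORT:-1521}
  printf '%s:%s:%s\n' "$addr" "$port" "$sid"
}
```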

Now we are ready to run RCU:

container# su - oracle
container$ soahome/oracle_common/bin/rcu

Follow through the RCU wizard. The database connect string will be db:1521:orcl.

Now we can create a SOA domain.

container$ soahome/oracle_common/common/bin/config.sh

Follow through the config wizard to create the domain.

Before we go on, it would be a good idea to save both containers as images. Exit from both containers, then create the images:

host# docker ps -a
host# docker commit -a "user" -m "db with soa schemas" ID
host# docker tag ID oracle-soa-db:12.1.3
host# docker commit -a "user" -m "soa with domain" ID
host# docker tag ID oracle-soa:12.1.3

We gave the db image a new name, ‘oracle-soa-db’, so we can keep using the empty database image for other things too, but for SOA we just update the oracle-soa:12.1.3 tag. You should tag and push both to your private registry for others to use as well.

Now you can fire up some containers using these new images, and link them, and start up the servers!

host# docker rm db
host# docker run -dt --name db -p 1521:1521 oracle-soa-db:12.1.3
(db)container# ./startup.sh
host# docker rm soa
host# docker run -ti --name soa -p 7001:7001 -p 7004:7004 -e DISPLAY=$DISPLAY -v /tmp/.X11-unix:/tmp/.X11-unix --link db:db oracle-soa:12.1.3 /bin/bash
(soa)container# su - oracle
(soa)container$ nohup soahome/user_projects/domains/soa_domain/startWebLogic.sh > adminserver.log &
(soa)container$ nohup soahome/user_projects/domains/soa_domain/bin/startManagedWebLogic.sh soa_server1 t3://localhost:7001 > soa_server1.log &

Now point a browser to http://host:7001/em and take a look around.
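If you would rather script that last step, for example to know when the environment is ready, you can poll the console with curl from the host. A sketch only: it treats any 2xx or 3xx answer as "up" (the console may redirect to its login page), and wait_for_url is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: poll a URL until it answers with a 2xx or 3xx status.
wait_for_url() {
  local url="$1" timeout="${2:-300}" waited=0 code
  while :; do
    # curl prints 000 as the status when it cannot connect at all.
    code="$(curl -s -o /dev/null -w '%{http_code}' "$url")"
    case "$code" in
      2??|3??) echo "$url answered with HTTP $code"; return 0 ;;
    esac
    if [ "$waited" -ge "$timeout" ]; then
      echo "gave up on $url after ${timeout}s (last status: ${code:-none})" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
}
```

For example, wait_for_url http://host:7001/em 600, where host is your Docker host.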

Create a container with SOA demo content

Now we have a nice reusable SOA image that we can use as the basis for a small ‘demo’ SOA environment. We are going to leave this as an exercise for you to try out by yourself! If you have followed through this post, you already know everything you need to do this.

Start up and link your two containers (if they are not still running). Then fire up JDeveloper and create a simple BPEL project and deploy it onto the SOA server. We won’t go through how to do this in detail – if you are reading this you will already know how to make a BPEL process and deploy it.

Once you have that done, shut down the containers and commit the changes into new images:

host# docker ps -a
host# docker commit -a "user" -m "my soa demo db" DB_CONTAINER_ID
host# docker commit -a "user" -m "my soa demo" SOA_CONTAINER_ID

Now you can fire up containers from these two new images to use your demo environment, or you can fire up containers from the previous two images to go back to a clean SOA environment – perhaps to build another demo, or to do some training or POC work.
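Switching between the demo and the clean environment then comes down to which pair of images you start. Here is a sketch, assuming you tagged the demo images (my-soa-demo-db:1.0 and my-soa-demo:1.0 below are hypothetical names) and have removed any existing db and soa containers with docker rm; run_env is a hypothetical helper, and DOCKER=echo gives a dry run.

```shell
#!/bin/bash
# Hypothetical helper: start a linked db/soa pair from the given images.
# DOCKER=echo prints the commands instead of running them.
run_env() {
  local db_image="$1" soa_image="$2" d="${DOCKER:-docker}"
  "$d" run -dt --name db -p 1521:1521 "$db_image" &&
  "$d" run -ti --name soa -p 7001:7001 -p 7004:7004 \
    -e DISPLAY="$DISPLAY" -v /tmp/.X11-unix:/tmp/.X11-unix \
    --link db:db "$soa_image" /bin/bash
}
```

Use run_env my-soa-demo-db:1.0 my-soa-demo:1.0 for the demo, or run_env oracle-soa-db:12.1.3 oracle-soa:12.1.3 for a clean SOA environment.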

Conclusion

Well, hopefully this has given you a good overview of Docker: not just the basics in the abstract, but a concrete example of using Docker in a Fusion Middleware environment. We have demonstrated creating and distributing images, linking containers, the lightweight virtualization, and also some (bonus) integration with Vagrant, Chef and Puppet!

We think that Docker is a pretty good fit for the first scenario we described in the motivation section: creating, managing and distributing demo and training environments from a centralized repository, with smaller image sizes, faster downloads, lower overhead and more flexible updates compared to the way a lot of people do it today, with VM images.

Stay tuned to hear some thoughts and experiences about using Docker in a Continuous Delivery environment – but that’s another post!

Great article. A great companion article (for folks who choose to go the Vagrant route when going hands on with this article) is at https://docs.docker.com/installation/ubuntulinux/. I faced a number of hiccups when I took the Vagrant approach, all of which the mentioned link solved.

Mark et al., great, solid article that teaches a lot, as always on your blog!

I think the killer feature of Docker is its vendor-independent format providing (almost perfect) location transparency. Being able to create containers locally that also run unchanged on the major clouds, such as Amazon and Google, is fabulous.

Supporting Docker with OEL and ksplice can only be the first step.

Would be cool to see Docker supported on Oracle Cloud soon 🙂

Excellent. Thank you!

You should play with the WebLogic Docker project. Configuring WebLogic is much easier using a silent install, for example. 🙂

http://github.com/weblogic-community/weblogic-docker

Great work! It would be nice if you joined your work with Bruno Borges’ effort on the weblogic-docker repository.

I think Puppet (or Chef) is a better way to manage configuration, nice to see how you integrate these tools.

Btw, for linking and orchestrating containers I found Fig (http://fig.sh) very useful.

Best,

Jorge.

Thanks Mark, I have been looking for this kind of information for a long time. It’s time for me to get some hands-on experience.